Julia Logan | ZANGOOSE.DIGITAL

People often ask which tool is best for this or that SEO task. Of course, you want a tool which gives you accurate data. But even the best tool is only as good as the person using it.

I could give you a long list of the tools we use for our very specific tasks, or we could argue about the pros and cons of various tools. But what really makes the difference is what you do with the output. It also helps to understand the limitations of the tools you're using and (bonus!) to be aware of their less obvious, outside-the-box uses; then you can get more out of them than other people using the same tools.

Here is one example: Majestic, one of our favourite tools. Traditionally considered a tool for link analysis, it has been a staple in our toolbox for many years. Everybody can use Majestic and everybody gets the same data; the question is, what do you make of it?

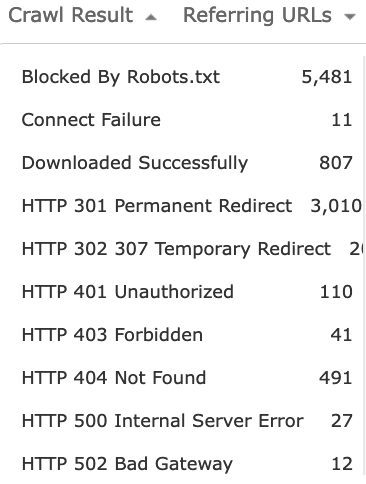

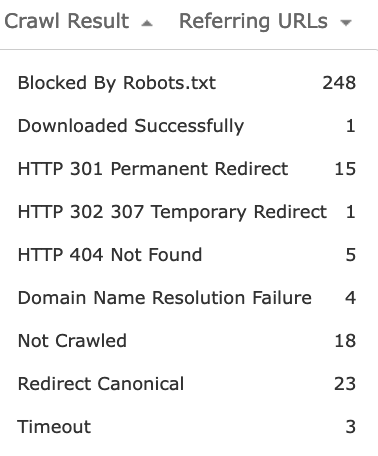

The image above shows one of the filters for the Pages report in Majestic: Crawl Result. The traditional use for the Pages report is to see which URLs of a site are known to Majestic and have external links (one does not always equal the other), so it's basically just a list of URLs. But look what a wealth of info I can get from it for the particular site I am looking at if I slice and dice the data with the filters, ask the right questions and pay attention to the details which stand out. It's almost as if this report alone could guide my SEO audit of the site in question:

- Why are so many pages with external links blocked by robots.txt? Is that the result of some malicious link spam? Or does the site have an affiliate programme, with affiliates linking to non-canonical URLs which need to be kept out of the search engines - in which case, is there a better way to do it?

- Connect Failure, Internal Server Error, Bad Gateway for pages with external links - are there any serious issues with the site's server? Is the site old, and has its architecture changed over time? If so, does anything need to be done about old pages with links? The same goes for 401, 403 and 404 pages - do they need to be fixed? Redirected? Do you need to get the external links changed to point to something relevant and live on the site?

- Links to URLs with temporary redirects - do those redirects need to become permanent? And again, are you wasting those external links, and could they be changed to point at the final destination instead?

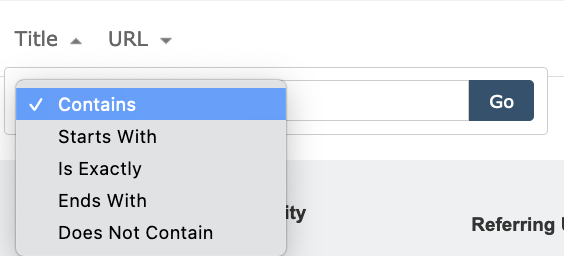
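The slicing by Crawl Result described above can also be done outside the tool once you have the report as a file. Here is a minimal sketch, assuming the Pages report is available as CSV; the column names `URL` and `CrawlResult`, the crawl result labels and the sample rows are all placeholders of mine, not Majestic's actual export format, so adjust them to whatever your file contains.

```python
import csv
import io
from collections import Counter

# Hypothetical sample standing in for an exported Pages report.
# Column names and crawl result labels are assumptions for illustration only.
SAMPLE_CSV = """URL,CrawlResult
https://example.com/,DownloadedSuccessfully
https://example.com/old-page,NotFound404
https://example.com/aff?id=1,BlockedByRobotsTxt
https://example.com/aff?id=2,BlockedByRobotsTxt
https://example.com/promo,TemporaryRedirect302
"""


def count_crawl_results(csv_text, column="CrawlResult"):
    """Count how many linked URLs fall under each crawl result."""
    reader = csv.DictReader(io.StringIO(csv_text))
    return Counter(row[column] for row in reader)


counts = count_crawl_results(SAMPLE_CSV)

# Print the crawl results from most to least frequent.
for result, number_of_urls in counts.most_common():
    print(f"{result}: {number_of_urls}")
```

An unusually large bucket (for example, many linked URLs blocked by robots.txt) is the kind of detail that prompts the questions in the list above; the counts themselves answer nothing.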

And that's just one little element of one report - here's another: did you know you could detect duplicate titles with Majestic?

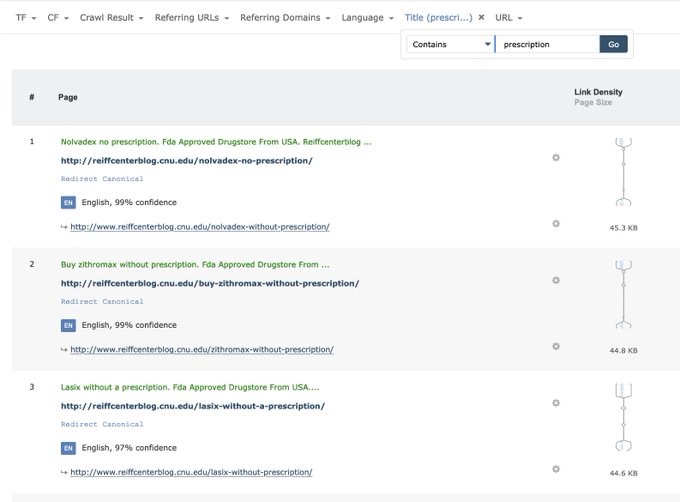

Another use of this filter: detect parasite pages on a hacked site (and not just those that exist now but also those that ever existed on the site).

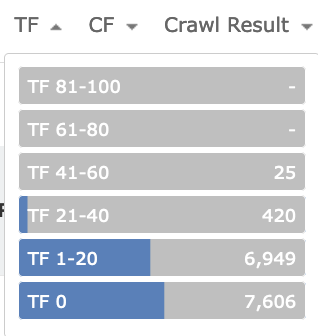
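If you prefer to run the duplicate title check on your own copy of the data, it can be sketched roughly like this. The (URL, title) pairs below are made up, and the normalisation (collapsing whitespace and ignoring case) is my own assumption about what should count as "the same title", not something Majestic does.

```python
from collections import defaultdict

# Hypothetical (URL, title) pairs standing in for rows of a Pages report.
PAGES = [
    ("https://example.com/", "Acme Widgets"),
    ("https://example.com/index.html", "Acme Widgets"),
    ("https://example.com/about", "About Acme"),
    ("https://example.com/?ref=partner", "acme  widgets "),
]


def find_duplicate_titles(pages):
    """Group URLs by normalised title and keep titles shared by 2+ URLs."""
    groups = defaultdict(list)
    for url, title in pages:
        # Collapse runs of whitespace and ignore case so near-identical
        # titles end up in the same group.
        key = " ".join(title.split()).casefold()
        groups[key].append(url)
    return {title: urls for title, urls in groups.items() if len(urls) > 1}


duplicates = find_duplicate_titles(PAGES)

for title, urls in duplicates.items():
    print(f"{title!r} is used by {len(urls)} URLs")
```

The same grouping, read the other way round, surfaces titles that appear only once and look nothing like the rest of the site, which is where a manual check for parasite pages could start.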

Or here's a filter which allows you to quickly gauge which URLs on a site have the lowest/highest TF links:

What are the possible uses for this one? E.g. a content audit: see what content on your site gets the most links, or the most valuable links. Or, for pages with extremely low Trust Flow (TF 0): is there any automated spam linked to those pages? Are the site's images getting scraped by image scrapers? If the number of such pages is very high, perhaps you're getting too many low-value links; is there anything to be done about it?

Here's another filter which is useful e.g. for quickly finding all URLs with parameters which have external links:

If that by itself does not look particularly useful, you can combine several different filters and e.g. gauge how many parameterised URLs with external links are not redirected or canonicalised properly:
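The same combination of filters can be approximated on exported data. The sketch below is only an illustration under my own assumptions: the field names `URL` and `CrawlResult`, the label `Redirect301` and the sample rows are hypothetical. It also covers only the redirect half of the question; whether a URL is canonicalised properly is not something these two fields can tell you, so that part still needs to be checked against a crawl of the site.

```python
from urllib.parse import urlsplit

# Hypothetical rows standing in for linked URLs from a Pages report.
ROWS = [
    {"URL": "https://example.com/shoes", "CrawlResult": "DownloadedSuccessfully"},
    {"URL": "https://example.com/shoes?ref=aff1", "CrawlResult": "DownloadedSuccessfully"},
    {"URL": "https://example.com/shoes?utm_source=news", "CrawlResult": "Redirect301"},
    {"URL": "https://example.com/bags?sessionid=42", "CrawlResult": "DownloadedSuccessfully"},
]

# Assumed label(s) meaning "this URL is permanently redirected".
REDIRECT_RESULTS = {"Redirect301"}


def has_parameters(url):
    """Return True if the URL carries a query string."""
    return bool(urlsplit(url).query)


def unredirected_parameter_urls(rows):
    """Return parameterised URLs whose crawl result is not a permanent redirect."""
    return [
        row["URL"]
        for row in rows
        if has_parameters(row["URL"]) and row["CrawlResult"] not in REDIRECT_RESULTS
    ]


to_review = unredirected_parameter_urls(ROWS)

for url in to_review:
    print(url)
```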

Again, this is just one tool, Majestic, and just one report in it - and you already get access to some data that none of your usual site crawling tools will readily provide. Of course, you will then need to check the site's Google Search Console and/or Bing Webmaster Tools, and you still need to crawl the site using one of the crawlers, e.g. Screaming Frog or Sitebulb, to get more information to answer the questions you ask. But questions like those above, arising from a quick glance at this Majestic report, are a good starting point to dig deeper and probably end up with a more useful and in-depth site audit than 90% of audits produced both in-house and by agencies and consultants these days.

This is why generic exported reports just don't cut it as site audits - there is always more to look at and more issues to discover, no matter what tool you use, if you know what you're looking at and what you're looking for. This is also why I am not afraid automation or AI will leave me jobless – there is no one scenario for every site out there. A standard scenario can cover the most basic and typical issues. But all sites are different, with different histories, structures, goals and relationships. Each individual site I audit simply has too many nuances that require a trained human eye and an experienced human brain.